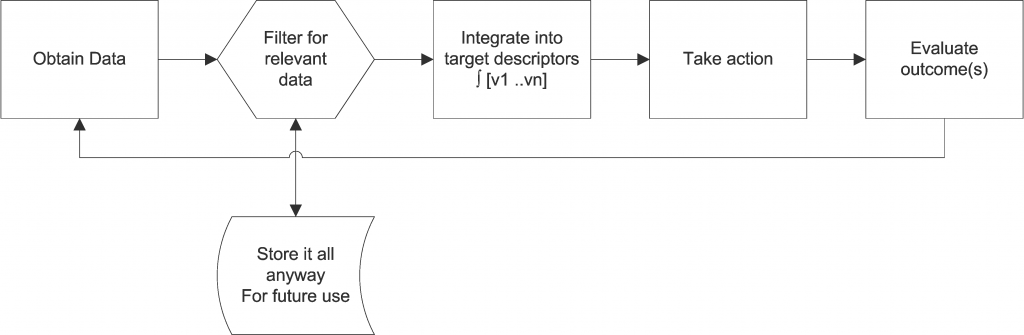

Diagram 1.

Web artificial intelligence (AI) evolution is driven, in part, by the evolution of the web. Daniel Dennett, in his recent book “From Bacteria to Bach and Back,” suggests that while AIs might evolve, they will not develop comprehension without purpose. This prompted me to consider what “purpose” or “motive” AIs might have in the web world that could inform their evolution.

I submit Diagram 1 into evidence. It is a simplified view of how we use the web to accomplish many tasks. First we “search.” Even Google does this behind the scenes, curating new websites and updates to existing ones. Next, a filter operation selects the relevant content, although some systems (Google, for example) save all of the content anyway. Then an integration process combines the selected data with other data sources to provide the desired “more complete picture.” For our example, let’s imagine we want to know everything about you: property ownership, political party, personality traits, and so on. We would search and filter many sites to build our dossier on you. Then we apply the data according to our objectives and measure the result. Did you click on our ad? Did you vote, or not vote, in the election? Did you buy our product? This “reward function” then feeds back into the system, informing the factors used in future integration as well as the options for future action.

While many of these steps are currently managed by humans or directed by top-down design, many reflect goals that could be assigned to AIs. Each step probably has its own reward function that could train a deep-learning network, enabling localized learning. The overall loop would then provide the overarching purpose and reinforcement.
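The loop in Diagram 1, with a distinct operation at each stage and a reward that feeds back into integration, can be sketched as a toy Python simulation. This is a minimal illustration under stated assumptions, not a description of any real system: the source names, the relevance and signal values, the filter threshold, and the simple multiplicative weight update (standing in for the deep-learning networks mentioned above) are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Source:
    """A hypothetical web data source (names and numbers are illustrative)."""
    name: str
    relevance: float  # how relevant the filter judges the source to be (0..1)
    signal: float     # how much the source actually helps the objective (0..1)


@dataclass
class WebLoop:
    """Toy search -> filter -> integrate -> apply/measure loop with feedback."""
    sources: list
    threshold: float = 0.5      # assumed filter cutoff
    learning_rate: float = 0.1  # assumed feedback step size (keep below 1)
    weights: dict = field(default_factory=dict)

    def search(self):
        # "Search": gather everything reachable (here, a fixed list).
        return list(self.sources)

    def filter(self, found):
        # "Filter": keep only the sources judged relevant.
        return [s for s in found if s.relevance >= self.threshold]

    def integrate(self, selected):
        # "Integrate": combine the selected sources into one weighted profile score.
        for s in selected:
            self.weights.setdefault(s.name, 1.0)
        total = sum(self.weights[s.name] for s in selected)
        return sum(self.weights[s.name] * s.signal for s in selected) / total

    def step(self):
        selected = self.filter(self.search())
        # "Apply and measure": in this toy, the observed reward is simply the
        # profile score (think of it as a hypothetical click-through rate).
        reward = self.integrate(selected)
        # Feedback: sources that beat the current weighted average gain weight
        # and the rest lose it, loosely analogous to selection in a population.
        for s in selected:
            self.weights[s.name] *= 1 + self.learning_rate * (s.signal - reward)
        return reward


loop = WebLoop([
    Source("property_records", relevance=0.9, signal=0.8),
    Source("social_media", relevance=0.8, signal=0.3),
    Source("news_comments", relevance=0.6, signal=0.6),
    Source("weather", relevance=0.1, signal=0.0),
])
rewards = [loop.step() for _ in range(50)]
print(f"first reward {rewards[0]:.3f}, last reward {rewards[-1]:.3f}")
```

With this update rule the total weight stays constant and the reward never falls from one pass to the next, so the loop steadily shifts toward whichever sources best serve its objective. Nothing in it weighs whether that objective is acceptable.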

Web AI Evolution is likely. So what?

We are expanding our AI capabilities to address several of these objectives, from data retrieval to advertising-placement analytics. Released “into the wild,” AI systems with these objectives are likely to succeed beyond our expectations. This creates an environment in which privacy intrusions and behavioral manipulation are driven by Darwinian processes, at computational speeds, without a moral compass. Initially, we will see these objectives directed by human masters toward the ends established by their clients and employers.

Will such systems expand beyond their design objectives? This could happen through errors, through unanticipated reinforcement, or through some unexpected directive from a human source. With software and systems distributed on a massive scale, it is not clear that an “off switch” could stop such a transformation from spreading or from producing unexpected consequences.

What directions do you think web AI evolution might take?

JOIN SSIT
