Disrupting Assumptions About Technology and Ethics in Engineering and Computing Education

While the history of technology is rife with accidents, breaches, and scandals, recent news is filled with stories that call into question not simply the actions of individuals or companies, but the nature of the technology itself. Cambridge Analytica’s data harvesting and psychological profiling, Facebook’s failure to prevent election interference via its platform, and Google’s censorship enabling collusion with authoritarian regimes can all be seen as the moral failures of malicious or negligent actors, but collectively they highlight the moral and political status of a technology-fueled social media universe in which human data is monetized, information access is controlled by a tiny circle of opaque private companies, and algorithmically amplified inflammatory rhetoric invariably trumps the truth.

In this time of massive growth in the scale and scope of technological innovations, it is more important than ever to look critically at the nature of these innovations and to challenge a naïve, techno-utopian attitude that innovation is synonymous with progress. Students in engineering and computing programs are exhorted to “disrupt” the status quo, yet the ethical lapses we have mentioned can be traced precisely to a disruptive, “move fast and break things” approach to innovation that aims to dethrone existing markets and values to establish industry dominance [2]. In such an environment, one thing goes unchallenged, unquestioned, and undisrupted: an unreflective attitude that technological change is inevitable and beneficial.

We are educators tasked with engaging students in ethical inquiry at a STEM-oriented university in the United States. Alexandra is a philosopher who teaches courses in engineering ethics and philosophy of technology to students across the campus, the vast majority of whom are in STEM majors. Charles is a computer scientist who teaches a required course on social and ethical aspects of computing for students in computer science and software engineering degree programs. Despite our different backgrounds, we have identified, through conversations about our ethics courses, a good deal of overlap in our goals. We both wish to shake our students out of easy attitudes about ethics: for instance, the common assumption that ethical responsibility comes naturally to “good” people or that ethical analysis is primarily concerned with establishing the “correctness” of individual choices. Similarly, we wish to challenge an unexamined technological utopianism that ignores the moral dimensions of technology design and use. This utopianism is amplified by the values of “technological solutionism” and “market fundamentalism,” long ingrained in high-tech culture, which have likewise taken root in educational institutions, curricula, and instruction [3], [4]. We find that many of our current students, aware of the high-profile moral failures of Silicon Valley, are receptive to a critique of these values; still, it is important for them to acknowledge that “nerding harder” [5] is not the appropriate answer to many ethical problems, and that social, legal, and policy approaches must also be considered.

We also see a kind of techno-utopianism in less obvious places, for instance in resistance to recognizing the limits of technological responses. Those who have quite understandably grown weary of Silicon Valley’s control have recently called for “ethical algorithms” without considering that ethics (properly understood) perhaps cannot be mechanized. Knee-jerk “fix the tech” approaches are themselves techno-utopian insofar as they reveal a techno-monocularity that can no longer even imagine real alternatives. At a minimum, we want our students to come away from our classes with the sense that doing ethics is an ongoing interdisciplinary and collective praxis that necessitates venturing outside the comfort zone of technical expertise [6].

How can we provide undergraduate engineering and computing students with this thoughtful kind of disruption in a meaningful and constructive way? Reflecting on our experiences as ethics educators, we have come to think of our principal task as one of defamiliarization. In one sense, this is nothing new; it has been the central task of philosophy for over 2,500 years. Situating it within the experience of engineering and computing students, however, requires special care.

The term defamiliarization, originating in the Russian Formalist School of literary criticism, refers to the practice of challenging the audience with presentations of everyday things in uncommon ways, so as to break audiences’ habits of perception and generate new insights and perspectives [7]. In one of the readings in Charles’s class, Czech author Čapek [8] (famous for introducing the word “robot” to the world) adopts a faux-naïve attitude toward technology, extolling the virtues of his new vacuum cleaner (not to keep his house clean, but rather to produce a constant supply of “dense, uniform, heavy, and mysterious” dust in its little pouch) and his new coke stove (not to heat his house, but to provide material for the vacuum cleaner). Čapek [8] subverts a conventional functional account of technology to explore the peculiar accommodations human “owners” make for their technological “helpers,” thereby complicating the distinction between the two in a humorous and insightful way. Similarly, we aim to break students out of accepted perspectives on their technical domains and uncritical approaches to ethics and to expose them to a diversity of ontologies, epistemologies, and values.

- Uprooting agency: Students’ perceptions of their relationship to technology tend to be quite simple: rational “users” interact with neutral “tools.” We aim to challenge them with a more complex notion of agency: a function of cultivated dispositions and habituated practices emerging out of practical engagement in the world. Phenomenological philosophy and science and technology studies (STS) provide empirically rich descriptions that reveal the unfounded nature of many typical assumptions about agency, assumptions that also sustain and encourage naïve views of ethics.

- Diversifying lenses: Drawing on different classical ethical theories (e.g., utilitarianism, deontology, virtue ethics, and care ethics) can help show students both the importance and the limitations of different interpretative frames. For example, classical theories tend to be human-centered and may tacitly rely on culturally specific, unexamined value assumptions.

- Transforming identity: We challenge students to reconceive what it means to be a full-fledged professional in their chosen field. We want critical ethical engagement to be not a sideshow or diversion but an essential element of our students’ personal and professional lives.

In this article, we briefly report on our efforts to bring these concerns into our ethics courses and relate some of our students’ experiences.

Uprooting Agency

To open students’ eyes to the real scope and complexity of ethical responsibility, we must challenge the common view of human agency. An inheritance from 17th-century Cartesian philosophy, this view of human subjectivity prioritizes the individual’s use of reason without giving sufficient attention to the historical, cultural, and practical embodied habits that shape our ideas and values. Agency is not simply an individual’s “free choice,” since our shared language always already directs our vision and our concern toward those things that our particular culture values and away from those it disavows. Consequently, it is crucial for the development of critical self-reflection that students recognize the element of passivity in agency, as paradoxical as this might initially sound [9]. We are shaped by our particular “exterior” worldly contexts: the historical situation and the various cultures we may inhabit, including institutional, corporate, and technological cultures. Our inherited Cartesian view of human agency as an isolated “interior” consciousness tacitly sets us up to think of ethical responsibility as primarily a matter of individuals calculating and making personal decisions. In most cases, this is simply too reductive. First, engineers typically work in groups, often within highly influential professional and corporate cultures whose values steer individual moral judgment over time, both explicitly and subtly. Second, and somewhat less obviously, emergent or novel ethical issues often have their roots in mundane, everyday bodily practices and assumptions that do not strike most people as sites for ethical engagement and decision-making. This is one reason why engineering ethics has been dominated by dramatic “disaster case studies” [10] and also why engineering students often feel that ethics is not really a matter of immediate concern for them.
As one student summed it up, “Honestly, I’m never going to be designing something as consequential as an O-ring for a space shuttle.”

Research over the past several decades in philosophy (especially phenomenology and work in the philosophy of technology that attends to the phenomenon of technological mediation [11]) and in the social sciences (especially STS) has given us empirically rich descriptions of the embodied everyday practices, and the relations with other people, technologies, and technological systems, through which we build skills and competencies and form habits over time. Understanding something of the nature of these complex relations is indispensable for ethics instruction in STEM contexts. STS scholarship reveals the social, institutional, and material dimensions of technologies [12], [13]; phenomenology homes in on the phenomenal structures of perception and interpretation that make everyday lived experiences of intersubjective life meaningful [14], [15]; and contemporary philosophy of technology, also drawing on phenomenological insights, examines the social, institutional, and material dimensions of everyday lived experiences with and through technologies, uncovering how these transform our moral perception and agency [16], [17]. These diverse approaches help our students reflect on their own positions as agents emerging out of their relations within complex historical and socio–techno situations. If we want to think about the various meanings of technologies, meanings that only emerge through our relationships with them, then we need to think of technologies as lenses through which we interpret the world. These ways of seeing, when attended to carefully, reveal tacitly enacted hierarchies of value that require critical reflection.

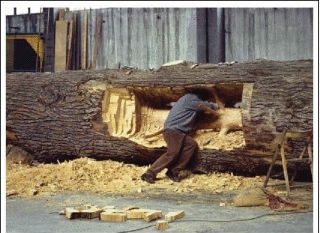

Here is a simple example that Alexandra uses in her class to demonstrate how different technologies shape what we see and therefore how we interpret and value certain aspects of the world. Imagine a huge swath of old-growth hardwood forest, perhaps from the vantage point of a helicopter flying above its towering canopy. Your extensive vision is already mediated by a host of avionic technologies networked in the cockpit, but generally speaking, you see from a kind of “imperial” perspective: seeing a forest “as far as the eye can see” gives you the impression of grasping the forest as a whole. Imagine now that your eye has already been “trained” by your access to an industrial sawmill. The functional scripts of these machines are certainly not “value-neutral,” since they enable your imagination to envision, and make possible, a future in which the landscape over which you are flying is radically transformed. A forest is potentially millions of board feet of lumber stacked and waiting to be sold on the market. Modern milling technologies help to shape how we interpret a forest as a natural “resource” to be stockpiled and made available for our use [6]–[18].

Certain ways of seeing with technologies, as in the case of our industrial sawmill that encourages us to translate a forest into a resource, can occlude other ways of revealing and thus other ways of valuing. This becomes especially clear when we juxtapose our image of the industrial high-tech sawmill with the work of Italian artist Giuseppe Penone, who uses hand tools to reveal a very different story about the forest [19]. Penone’s hand tools reveal something of the life of a tree by uncovering the sapling buried within the mature tree (Figure 1). His trees seem to defy the category of “resource” since through this alternative techno-human lens, we get a glimpse of the tree’s natural history. This is not an object stacked in a lumber yard, but a work of art. While still made available for our “use” as an artwork, this tree is available in a manner that resists the instrumental, industrial lens of the sawmill, potentially provoking us to interpret both trees and forests in radically different ways.

Engaging students with this simple example disrupts two common assumptions: 1) that tools and technologies are value-neutral and 2) that individual decision is the most salient consideration when trying to determine the ethical character of human–technology relationships.

Diversifying Lenses

A standard component in many ethics curricula is a litany of classical ethical theories (deontological, utilitarian, virtue ethics, etc.), typically presented without much guidance on how to use them. Computer science and software engineering students, who already have strong opinions about programming languages and often identify themselves as Python, C, Java, or even Perl programmers, can be tempted to think of ethical theories in a similar way: as solutions or even identities. For instance, many students boldly proclaim themselves utilitarians, at least until they discover the difficulties of justifying decisions based solely on utilitarian principles. We encourage them to consider different ethical theories, philosophical and sociological approaches, and methods as lenses that give essential but inevitably incomplete views. Ethical consideration is necessarily ongoing because the worldly field is perpetually in flux; new people and new technologies mean new contexts and new ethical considerations. Ethics is ultimately about how to live in the world together and so cannot be reduced to individual moral reflection and decision. Ethical agents need to seek dialog with a diversity of other agents and to attend to the values inscribed in embodied habits and material configurations.

Understanding ethics as an ongoing orientation and commitment is key. One approach that supports this understanding was envisioned by van de Poel and Royakkers [20], whose Ethical Cycle framework invites students to look through a multitude of lenses: not just those crafted by Kant, Mill, and so on, but also the perspectives of their fellow students and others. The ethical cycle was conceived as a way to adequately address the “ill-structured” character of ethical judgment [20]. The model could also be used to train faculty, instructors, and potentially practitioners. Using a series of iterative steps, the ethical cycle encourages students to make more deeply considered judgments about real-world scenarios. Its five basic steps (moral problem statement, problem analysis, options for action, ethical evaluation, and reflection) direct students to analyze the moral problems of real-life situations in a structured, iterative, and collaborative manner.

For example, while the cycle begins with a formulation of the moral problem, this problem usually becomes clear only after an inquiry into the many facets of the situation, alongside considerations gleaned from various theories and methods, and after critiquing initial assumptions about who the relevant stakeholders are. Formulating a rich description of the moral problem, then, is an iterative process that carries over into the other steps, with students continually refining their articulation of the scenario. The problem analysis step requires students to collect as many relevant elements of the moral situation as possible: the interests of the obvious and less obvious stakeholders, the moral values relevant to the situation from the perspectives of the different stakeholders, and the relevant facts. Students often find that after going through this stage, they need to reformulate their characterization of the moral problem. During the options for action step, students consider possible actions that might mitigate the problem, or at least aspects of it. If they are considering a complex real-world scenario, they will need to creatively generate their own options for action. At this point, it is often helpful for students to engage in conversations with the stakeholders (if possible) and with classmates whose different backgrounds, experiences, and judgments can be brought into consideration.

After selecting the “best” of the possible actions, students then engage in a more formal type of ethical evaluation, putting aside for the time being, as best they can, their intuitive reactions in order to consider philosophical frameworks in relation to each of the possible options for action. Traditional ethical theories like utilitarianism, deontological ethics, virtue ethics, and ethics of care are employed not as sets of rules for generating a “right” answer, but rather as ways to illuminate issues or reveal oversights. Students may also turn to insights from phenomenology, philosophy of technology, and STS to think about additional ethical considerations regarding the human–technology relations at play in the scenario.

Finally, students are asked to turn to each other for collaborative reflection. The critical aim at this stage is to reflect upon, and ideally produce some coherence among, three types of moral beliefs: considered moral judgments, moral principles, and background theories, in a “wide reflective equilibrium.” According to van de Poel and Royakkers [20, p. 147], “The background theories include ethical theories, but also other relevant theories… [since these] block the possibility of simply choosing those principles that fit our considered judgments… As we see it, this process is not so much about achieving equilibrium as such, but about arguing for and against different frameworks and so achieving a conclusion that might not be covered by one of the frameworks in isolation.”
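
Because the ethical cycle resembles the iterative process models engineering and computing students already know, it may help some readers to see its control flow written the way such a student might sketch it. The following is a hypothetical Python sketch, not van de Poel and Royakkers’ own formulation: the names `walk_ethical_cycle`, `analyze`, `deliberate`, and `CycleJournal` are illustrative inventions, and every judgment is supplied by people through the two callbacks. It captures only the structure described above (problem analysis can send the group back to reformulate the moral problem, and any conclusion remains provisional); it models the sequencing of the steps, not the ethical reasoning itself.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class CycleJournal:
    """Hypothetical record of one group's passes through the ethical cycle."""
    problem_statements: List[str] = field(default_factory=list)
    rounds: int = 0
    provisional_conclusion: Optional[str] = None  # None: no conclusion reached yet


def walk_ethical_cycle(
    problem: str,
    analyze: Callable[[str], Optional[str]],
    deliberate: Callable[[str], str],
    max_rounds: int = 3,
) -> CycleJournal:
    """Sketch of the cycle's control flow; people make every judgment.

    analyze(problem) stands in for problem analysis (stakeholders, values,
    facts). It returns a reformulated problem statement, or None if the
    group accepts the current statement for now.
    deliberate(problem) stands in for options for action, ethical
    evaluation, and collaborative reflection; its result stays provisional.
    """
    journal = CycleJournal()
    for _ in range(max_rounds):
        journal.rounds += 1
        journal.problem_statements.append(problem)  # step 1: state the problem
        revised = analyze(problem)  # step 2: analysis may reframe it
        if revised is not None and revised != problem:
            problem = revised  # return to step 1 with the new framing
            continue
        journal.provisional_conclusion = deliberate(problem)  # steps 3-5
        break
    # If max_rounds runs out, the journal holds reformulations but no conclusion.
    return journal


if __name__ == "__main__":
    def analyze(problem: str) -> Optional[str]:
        # Hypothetical: a classmate points out a stakeholder the first framing missed.
        if "minority communities" not in problem:
            return problem + ", including impacts on minority communities"
        return None

    journal = walk_ethical_cycle(
        "Should the Census Bureau use differential privacy?",
        analyze,
        lambda p: "provisional view on: " + p,
    )
    print(journal.rounds, journal.problem_statements[-1])
```

The cap on rounds is a design choice of the sketch: a run that ends without a conclusion is a legitimate outcome, echoing the point that solutions to ill-structured moral problems are always provisional.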

We have found that when students “walk through” a process like the ethical cycle together, they come to appreciate, in a deeper, more enduring way, the absolute centrality of dialog and critical inquiry for ethical engagement. Introducing them to ethical theories in the context of these collaborative sessions has also helped to reduce their tendency to treat the theories as if they were “camps” or political parties to which they must swear allegiance. Instead, they discover that the various ethical theories, coupled with insights from the philosophy of technology and STS, are more valuably understood to function as “defamiliarizers” and enhancers of their critical perception. We teach ethical theories to demonstrate how different foundational (metaphysical, epistemological, and normative) frameworks can significantly alter how one interprets the same situation as well as the possible responses to it.

Transforming Identities

We want our students to adopt ethical inquiry as part of what it means to be an engineer or computer scientist. If what we do in our courses remains outside the conception of their chosen discipline, then we have not succeeded. Finding connections to other concepts and practices that they are familiar with can help, but we must be careful not to reduce ethical inquiry to yet another “applied” skill. Rather, we encourage students to reconceive their role as technologists so that critical ethical inquiry becomes part of their job description.

The similarities between the ethical cycle and iterative design models in engineering are readily apparent, and by design; indeed, an alluring aspect of the ethical cycle for engineering and computing students is that it looks like process models they have seen in their technical coursework [21], [22]. Software engineering, in particular, has seen the rise of Agile development methods, an iterative alternative to “big design up front” approaches [23]. In Agile, development proceeds in short cycles in which product functionality is advanced incrementally. This approach works particularly well in contexts where customer needs are emergent or regularly changing. Agile development is popular not only in industry but also in academia, since it is a feasible process model for project courses: a typical academic term usually leaves just enough time for a few iterations, giving students a semirealistic project experience.

Van de Poel and Royakkers [20], citing Herbert Simon, note the fundamentally “ill-structured” nature of moral problems: there is no one right way to frame the moral problem and no one correct response to it. As they put it, “… it is usually not possible to make a definitive list of all possible alternative options for action. This means that solutions are in some sense always provisional” [20, p. 136]. Students with prior Agile experience have taken similar provisional design steps, continually evaluating them and comparing them to alternatives. While this prior experience may not entirely eliminate an attitude of “solutionism,” it can make students more receptive to the idea that interesting problems may never be truly “solved.”

Derby and Larsen [24], in their classic book on Agile, use a two-part cycle image to represent the iterative nature of Agile development (Figure 2). The left side of the cycle includes activities directly tied to technical implementation, while the right side is devoted to the retrospective, an honored concept in Agile. Generating insights from prior experience and acting collaboratively and creatively to adjust the process are essential elements of Agile. Derby and Larsen [24] explain the importance of the right side of the cycle:

“Using this structure—set the stage, gather data, generate insights, decide what to do, and close the retrospective—will help your team do the following:

- Understand different points of view.

- Follow a natural order of thinking.

- Take a comprehensive view of the team’s current methods and practices.

- Allow the discussion to go where it needs to go, rather than predetermining the outcome.

- Leave the retrospective with concrete action and experiments for the next iteration” [24, pp. 13–14].

Looking at this passage from the perspective of ethical inquiry, we see many commonalities: 1) understanding different points of view; 2) taking a comprehensive view; and 3) allowing the discussion to go where it needs to go. But it is important to understand another essential aspect of Agile: a focus on functionality. Every iteration must result in “value for the customer.” Typically, this means fulfilling customer requirements, often called “stories.” Agile development is based on a primary directive to make customer stories come true, that is, to refine functionality toward a more satisfactory product.

Schwaber and Beedle [25] emphasize the efficiency gained from a total focus on functionality in their foundational book on Scrum, an Agile methodology. The cover of the book illustrates their point (Figure 3): if your task is to read the names of the colors, writing them in distracting colors slows the process. Conversely, eliminating distracting elements and focusing on product requirements leads to a clean, linear implementation. They articulate their motivation to minimize “noise” during development iterations:

Figure 3. Schwaber and Beedle’s [25] book on Scrum.

“Noise in systems development is a function of the three vectors of requirements, technology and people. If the product requirements are well known and the engineers know exactly how to build the requirements into a product using the selected technology, there is very little noise of unpredictability. The work proceeds linearly, in a straight line, with no false starts, little rework, and few mistakes” [25, p. 5].

Students experience the “flow” of separating concerns and focusing on functionality when they do Agile in project courses, and it tends to be a rewarding experience for them. But this satisfying feeling of flow does not transfer well to students engaging in the ethical cycle, where new evidence or analysis can radically shift perspectives on the moral problem at hand.

Ultimately, do the differences between critical ethical inquiry and iterative development outweigh the similarities? Does making a connection between the two breed a false familiarity? It is true that critical ethical engagement, like Agile, is nonlinear, and that reflection and consideration of other perspectives are honored in Agile. But iterations of ethical reasoning map poorly to refinements in functionality. User stories contain context but are always focused on functionality, and only certain people get to write the stories. Students are asked to eliminate “noise” in Agile iteration, but that same “noise” may be crucial in ethical considerations. Critical ethical inquiry is disruptive at its core and does not sit well with a process of incremental refinement.

Student Experiences

We include some illustrative (but admittedly anecdotal) snapshots of student engagement in critical ethical analysis. We plan to conduct a more thorough qualitative analysis of student work in the near future.

In Charles’s class, students studied the U.S. Census Bureau’s decision to use differential privacy in the 2020 Census [26]. They employed the ethical cycle to address the moral questions at the heart of this issue, meeting and discussing their findings with one another in small rotating groups at each iteration. The journals of their ethical investigations reveal the power of iterative reflection with fellow students. For instance, one student starts with an underdeveloped stakeholder analysis:

“At a base level, the U.S. government is very interested in this data, as they will use it when considering how to draw voting lines and give out funds based on populations. The American people are also an important stakeholder, as it is their data that is being released in the census. Finally, any research center/individual who uses this data is a stakeholder, as they will want to make sure that this data is as accurate as possible.”

At the subsequent group reflection, however, a fellow student raises an important consideration:

“<NAME> discussed how the Census Bureau should communicate with minority groups who would be impacted the most by differential privacy, as he had further researched and found that, at least where the NCAI was concerned, the bureau had done a poor job in their communications.”

This new perspective clearly influences the student’s analysis in the second iteration:

“The Bureau has argued that it is their responsibility to do everything they can to prevent specifically the data that they collect from being misused, and while this is a valid argument, I feel that it does not outweigh the damage that is being done to minority communities.”

By the time of the final reflection, the duties toward minority communities have found a central place in the student’s analysis:

“While I personally stand against the idea of differential privacy as a whole due to the fact that it misrepresents minority communities, I do understand the need for some method of privacy protection being applied to the census.”
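
For technically minded readers, one way to see why students connected differential privacy with the misrepresentation of small communities is a minimal sketch of the textbook Laplace mechanism for counting queries. This is an illustrative assumption, not the Census Bureau’s production disclosure avoidance system, which is considerably more elaborate; the function names, the privacy-loss parameter epsilon = 0.5, and the population counts are hypothetical. Because noise of the same scale (1/epsilon for a count) is added regardless of a group’s size, the expected relative error grows as the true count shrinks.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    rate = 1.0 / scale  # random.expovariate takes a rate (1 / mean)
    return rng.expovariate(rate) - rng.expovariate(rate)


def noisy_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Textbook Laplace mechanism for a counting query (sensitivity 1).

    Hypothetical illustration only; not the Census Bureau's algorithm.
    """
    return true_count + laplace_noise(1.0 / epsilon, rng)


def expected_relative_error(true_count: int, epsilon: float) -> float:
    """E|noise| / true_count. For Laplace(0, b), E|noise| = b = 1 / epsilon."""
    return (1.0 / epsilon) / true_count


if __name__ == "__main__":
    rng = random.Random(2020)  # fixed seed so the demo is repeatable
    epsilon = 0.5  # hypothetical privacy-loss budget for this one query
    for label, count in [("large group", 50_000), ("small group", 40)]:
        released = noisy_count(count, epsilon, rng)
        print(f"{label}: true={count}, released={released:.1f}, "
              f"expected relative error={expected_relative_error(count, epsilon):.4%}")
```

With these hypothetical numbers, the expected absolute error is about two people in either case: negligible for a group of 50,000, but about 5% for a group of 40.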

Furthermore, group discussions precipitated thoughtful reconsiderations of their original “takes” on the problem through ethical theories:

“Regarding the Kantian perspective, my discussion group that week raised an interesting opposite view: that (to my understanding of their point) trying to prioritize a nebulous concept of “privacy” over the known, tangible positive value of accurate census data, itself seems like a Kantian violation … ”

“I decided I needed to think more on the issue, but overall still saw violating individual privacy as a more immediate/individual/direct use of humans as means, than the loss of census data. The idea of universal application (if everyone were to apply this principle) to me also argued in favor of privacy over data, to a certain extent at least.”

At the same time, there is a risk that students will view this critical consideration of ethical theories as a form of “survival of the fittest,” eliminating all but the one “correct” lens—as illustrated in this student comment:

“All in all, this is a very complex situation and no ethical lens has the perfect answer. More research needs to be done to help us eliminate certain lenses and see what outcome we should strive for.”

In an end-of-term reflection, students in Alexandra’s class note that the ethical cycle does not clearly acknowledge the agency of technology itself:

“As we see from the works of Latour, Winner, and Ihde, technology is not a neutral tool. It has the capability to transform and influence its users and unfortunately that is not a concept that users are prompted to consider in the ethical cycle.”

They point out that the concept of stakeholder does not fit well with nonhuman agents:

“We discussed among our group that if technology is a key influencer of how the work gets done it could be a stakeholder. However, we acknowledged that one of the major problems with considering technology a stakeholder is that it is not a living being.”

Furthermore, they note that the ethical cycle exerts a certain pressure to converge to a “Morally Acceptable Action,” perhaps prematurely:

“Though aware that the ethical cycle provided some degree of support for all of our potential solutions, we felt pressured to look at the number of supporting theories to make a decision between the two solutions our group seemed split between. Thus we argue, even if it is not the intended purpose of the framework, that the ethical cycle is set up in a way that encourages its users to attempt to quantify qualitative results.”

It was particularly gratifying to see students come up with a critique that had not occurred to either of us: that even a well-motivated and “open” ethical inquiry can be subverted by inequalities:

“The quality of the ethical cycle is highly dependent on the abilities and openness of the individual user. Someone with very limited knowledge of ethics may not be able to get as in-depth into the ethical theories or may not be able to communicate their ideas as clearly as someone with years of ethical knowledge and experience.”

Our selected student reflections illustrate that our “strange” take on technology can encourage students to think carefully and critically. Of course, our contact with the students is limited; an ethics course can provide exposure to critical inquiry, but incorporating it elsewhere in students’ engineering experiences would provide the continuous reinforcement needed to make it part of their engineering identity. Introducing opportunities for ethical inquiry into technical project-based courses is promising but challenging; students must be able to balance both technical and ethical cycles of development and inquiry, and engineering educators who facilitate these experiences must themselves feel comfortable with the disruptive nature of iterative ethical reasoning.

Much of the “disruption” comes, of course, from challenging the still far-too-common assumption that technological and social knowledge are radically different. This epistemological hierarchy, which posits the objectivity and purity of technological knowledge over and against social and political knowledge, is still the root of much of what ails us. As Cech’s [27, p. 45] research has shown, typical engineering education actually “fosters a culture of disengagement [insofar as it] defines public welfare concerns as tangential to what it means to practice engineering.” Repeatedly exposing students to philosophical ethics, philosophy of technology, phenomenology, and STS research that does not shy away from epistemological questions regarding the socio–techno construction of knowledge is essential for the development of students’ rich ethical engagement. So we repeatedly find ourselves asking a paradoxical question: how can we make engineering and computing students, and perhaps more importantly many of their instructors, comfortable with these admittedly uncomfortable learning goals, which seem, at least initially, to challenge the very (epistemological) supremacy of engineering and computing disciplines?

Author Information

Alexandra Morrison is an associate professor of philosophy with the Humanities Department, Michigan Technological University, Houghton, MI, USA. Her current research interests include questions of agency and ethics in human-technology relations.

Charles Wallace is an associate professor of computer science and an associate dean for curriculum and instruction with the College of Computing, Michigan Technological University, Houghton, MI, USA.

![Figure 1. - Giuseppe Penone, uncovering a tree’s history [19].](https://ieeexplore.ieee.org/mediastore_new/IEEE/content/media/44/9893499/9893515/morri1-3197274-small.gif)

![Figure 2. - Cyclic representation of Agile development [24].](https://ieeexplore.ieee.org/mediastore_new/IEEE/content/media/44/9893499/9893515/morri2-3197274-small.gif)

![Figure 3. - Schwaber and Beedle’s [25] book on Scrum.](https://ieeexplore.ieee.org/mediastore_new/IEEE/content/media/44/9893499/9893515/morri3-3197274-small.gif)
