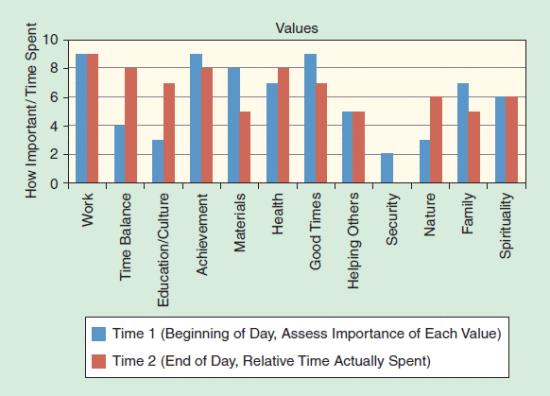

Figure 1. Sample individual survey results for the Happathon Project.

What are your values? I’m asking you, the reader.

As a quick exercise, try to write down ten values you hold dear on a piece of paper. You can use words like integrity, honesty, family, privacy, or any other value-oriented word that comes to mind. However, there’s a catch: you also need to be able to prove to yourself that you live to these values every day.

In a time when artificial intelligence and autonomous systems (AI/AS) are providing more opportunities for personalization and time savings than ever before, it’s critical to pause for a moment to ask, “How will machines know what we value if we don’t know ourselves?”

This isn’t rhetorical, and the play on words is intentional. While it may be easy to criticize the programmers writing the code that defines AI/AS, where machines or systems that mirror human values come into play, we as individuals need to identify, test, and codify these attributes so we can best help technologists align their creations with our deeply held beliefs.

Thankfully, applied ethical considerations for artificial intelligence have become a hot topic in the past few months. The formation of the Partnership on AI, which is working to create ethical guidelines for the corporate arena, and the Asilomar Principles on AI, featuring twenty-three principles along these lines, are just two examples of recent efforts reinforcing the need to think preemptively about values-driven issues before launching AI/AS products, rather than only addressing negative unintended consequences once something is released.

This same mentality is what drove the launch of The IEEE Global Initiative for Ethical Considerations in Artificial Intelligence and Autonomous Systems in April of 2016. A key focus for The IEEE Global Initiative is the creation and updating of Ethically Aligned Design, a document representing an early code of ethics for AI/AS created by over one hundred leading minds in AI, AS, ethics, policy, business, academia, and science. The document features over eighty Issues from eight different committees, and each section also offers Candidate Recommendations as directional solutions for readers.

Created to pragmatically help the creators of AI/AS in their work today, it was launched as a Request for Input (RFI) so readers could actively contribute feedback toward the creation of Ethically Aligned Design, Version Two. Utilizing critique from the RFI and responses at their recent event in Austin, TX, members of The IEEE Global Initiative will release Ethically Aligned Design, Version Two, in October of this year. Plans are already in motion to continue evolving the document through this community-oriented, consensus-driven process for a third and future versions, while seeking a broader cultural response to the Issues and Recommendations provided. The goal is that Ethically Aligned Design will be seen globally as a key resource and a code of ethics for AI/AS that will stay evergreen as it’s iterated once a year.

Making It About You

But now back to you and your values. What do you believe? How do you know? Are you living to your values and how can you prove that you are?

As a pragmatic tool for a personal experiment, I’ve provided a graph (Figure 1) representing a survey I created with Dr. Margaret L. Kern, Senior Lecturer at the Center for Positive Psychology, Melbourne Graduate School of Education, Melbourne University. Peggy and I created the survey for my non-profit, The Happathon Project, a few years back as a way to help people identify and track their beliefs via a methodology called Values by Design (VBD).

I based the term on Privacy by Design, a methodology for assessing and protecting personal data created by Ann Cavoukian, Ph.D., who at the time was the Information & Privacy Commissioner of Ontario, Canada. I’ve been an advocate of personal data and privacy control for years, not in relation to one’s preferences regarding privacy (e.g., how much information you like to share about yourself on Facebook, etc.) but in the vital need to provide every individual with a method for controlling and clarifying how they’d like their data to be shared.

I mention this here because identifying and stating your values to yourself and others has to come as a complement to the access to and safe sharing of your personal data.

But that’s a different article.

For now, check out Figure 1. It shows data from the survey Peggy and I created, drawn from the fifty people who participated in our three-week experiment, which focused on the increase in positive wellbeing based on the identification of one’s values.

The twelve words listed on the bottom of the graph represent twelve values participants tracked for three weeks, after first identifying their “Values by Design” at the beginning of our experiment.

You can do the same. Here’s how:

- Look at all twelve values listed here and on a scale of 1–10 ask yourself, “how important is this value to my life right now?” Make a list for all twelve values. This comprises your “Values I.D.” or a portrait of the specific ways you view each of these values in your life in a holistic manner.

- At the end of the day for a week or two, look at this list again and ask yourself, “did I live to these values today?” Then, for each value, pick a number from zero to ten representing how much time you spent focusing on that value. So, for instance, if you were outside all day you might say “Nature” was a ten. If you didn’t work at all, you would list “Work” as a zero.

- At the end of a week (or two, if you can track that long), add up your scores for each value and divide by the number of days you tracked. This gives you a daily average for each value.
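If you would rather keep your tracking in a short script than on paper, the arithmetic in the last step can be sketched in Python. This is only an illustration and not part of the original survey: the three value names and all of the scores are made-up sample data, and `average_scores` is a hypothetical helper name.

```python
# Hypothetical sample data: one score per tracked day for each value.
# The value names and numbers are invented for illustration only.
daily_scores = {
    "Nature": [10, 4, 6, 8, 2],
    "Work": [0, 8, 9, 7, 6],
    "Family": [5, 5, 7, 6, 7],
}


def average_scores(scores_by_value):
    """Add up each value's daily scores and divide by the days tracked."""
    averages = {}
    for value, days in scores_by_value.items():
        if not days:
            # Assumption: a value with no tracked days is simply skipped.
            continue
        averages[value] = sum(days) / len(days)
    return averages


averages = average_scores(daily_scores)
for value, avg in averages.items():
    print(f"{value}: {avg:.1f}")
```

Tracking for two weeks instead of one only means each list gets longer; the division by the number of days keeps the averages comparable.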

Now comes the Values by Design part of your own experiment. Look at the “Values I.D.” assessment you created for yourself before you began your daily tracking and compare it with the results of what values you lived to for one or two weeks. In general, you’ll notice one of two trends happening with your data:

- You spent your time living to your values. Meaning, each day whatever number you picked roughly corresponded with which values you said were least/most important in your life overall.

- You didn’t spend time living to your values. Unless it was a unique week (travel/vacation), you didn’t focus your time on the values you indicated in your “Values I.D.” were the most important to you.

Note this is not an activity designed to be condemnatory in any way. You shouldn’t (hopefully) feel judged by the results of your tracking. The goal is to help you be introspective about the difference between what you say you believe and what you spend your time doing that may or may not reflect those beliefs. Then, by identifying the specific areas that are out of balance, you can either readjust your “Values I.D.” and how you think about what you hold most dear, or adjust how you spend your time to focus more directly on what you’ve identified is important to you.
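The comparison described above can also be sketched in a few lines of Python. Treat this as a rough aid for reflection under stated assumptions, not as the scoring method used in the survey: the sample numbers are invented, `compare_values` is a hypothetical helper, and the two-point threshold for what counts as "roughly corresponding" is my own assumption, since the exercise does not define one.

```python
# Hypothetical sample data, invented for illustration only.
values_id = {"Nature": 9, "Work": 6, "Family": 8}        # importance ratings
lived_avg = {"Nature": 3.5, "Work": 9.0, "Family": 7.5}  # daily averages

# Assumption: a gap of two points or less counts as "roughly corresponding".
GAP_THRESHOLD = 2.0


def compare_values(importance, lived, threshold=GAP_THRESHOLD):
    """Label each value by how its lived average compares to its importance."""
    report = {}
    for value, rating in importance.items():
        gap = lived[value] - rating
        if abs(gap) <= threshold:
            report[value] = "in balance"
        elif gap < 0:
            report[value] = "less time than its importance suggests"
        else:
            report[value] = "more time than its importance suggests"
    return report


report = compare_values(values_id, lived_avg)
for value, note in report.items():
    print(f"{value}: {note}")
```

A value flagged in either direction is simply a prompt to revisit your rating or your schedule, in keeping with the non-judgmental spirit of the exercise.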

While it may seem like common sense to know that living to your values can increase your happiness and wellbeing, it can be hard to do unless we examine our actions and reflect on our choices in this way.

Joining the Work

Hopefully this exercise has given you insight into how difficult it can be to track your own actions to identify your values, let alone build code, a machine, or a system that can represent the beliefs of users in its output. This is why it’s so critically important to support the technologists creating AI/AS to improve our beneficial future. If you’d like to join our work with the IEEE Global Initiative, please get in touch with me (I’m the Executive Director) and we’ll get you involved on a Committee or a Standards Working Group.

That way, when you think of the question, “How will machines know what we value if we don’t know ourselves?” you can work with the rest of the thought leaders trying to provide expert and thoughtful guidance on the ethical considerations guiding AI/AS into a positive and pragmatic future.
