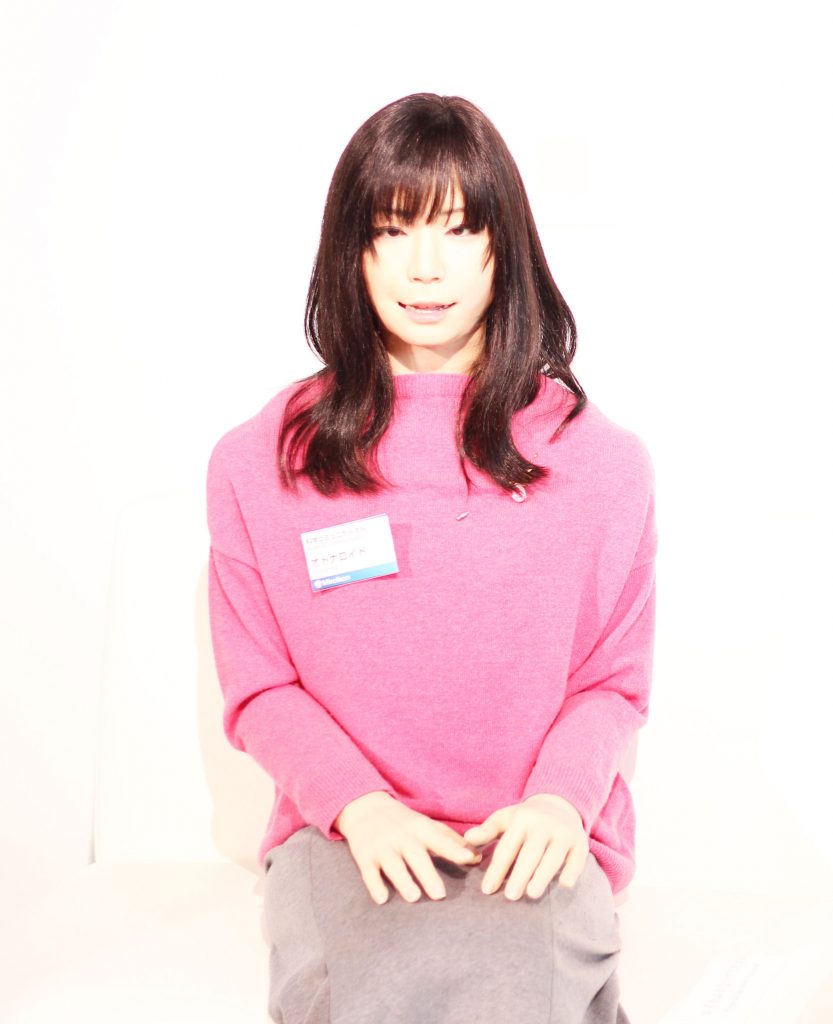

Figure 1. Asuna android on display at Miraikan (The National Museum of Emerging Science and Innovation) in Tokyo.

The desire to create a sentient Artificial Intelligence (AI) as complex as a human intelligence, or one that even surpasses it, a superintelligence, is raging. The Economist recently reported, “Firms such as Google, Facebook, Amazon and Baidu have got into an AI arms race, poaching researchers, setting up laboratories and buying start-ups” [1]. On one level, this race responds to the need for highly sophisticated personal digital assistants to help people navigate the tsunami of information produced by the Internet, spawned from the profit-generating drive of these very same companies [4]. On another level, the race involves getting computer algorithms, and AI technology itself, to understand human emotions and to respond emotionally [7]. Recognition of emotions, feelings, and sentiments has become the gold standard, eclipsing the older view of AI as aspiring to be a rational and conscious intelligent entity, a smart machine. IBM’s Watson, a cognitive assistant that won Jeopardy in 2011, aspires to function with “accuracy, confidence and speed” in its natural-language processing feats. Watson was to be smart. Now, we want our devices to adapt to us and be personal companions rather than geniuses.

However, if we imbue our AI with the skill of emotional manipulation, will we become emotionally vulnerable? Can we use speculative commentary made in fiction to address our fears in this regard?

Two recent sci-fi films, Her (2013) [5] and Ex Machina (2015) [3], grapple with AI companionship in fictional near-future, urban, American settings. Both involve AIs built by corporations resembling Google’s colossal Search Empire. Both involve the emotional manipulation of lonely, sensitive, professional men by highly sophisticated, feminized, and ambivalent superintelligences that are evolving through a process of deep learning and adaptation during the relationship. Both AIs (one an operating system, “Samantha” of Her, the other embedded in a robot, “Ava” of Ex Machina) want to become immortal and resist termination. While Ava and Samantha play the role of love interest, they also develop as emotional helpmates and companions for humans. The setting is the home, not a computer lab.

Her

The opening scene of Her illustrates near-future digital life and subtly questions the erosion of our reverence for human emotion. We stare directly at the face of Theodore, the main character, as he composes a love letter for an elderly woman, “Loretta,” who is wishing her husband of 50 years a happy anniversary. Theodore works for Beautifulhandwrittenletters.com, a futuristic Hallmark company. His computer prints out the letter on blue stationery in pen ink mimicking the handwriting of his client, Loretta. Beautifulhandwrittenletters.com has simulated, mechanized, and commoditized the traditional love letter. The next step, that an AI persona could replace Theodore as the emotional caretaker or surrogate, seems plausible.

Theodore soon meets his own AI superintelligence, Samantha, of the new operating system OS ONE. She is a compelling, stable, and friendly AI with access to all the information on the Internet, and she tailors it to Theodore’s whims. Her disembodied voice fills his apartment and his lonely, reclusive life. She wins Theodore’s heart through conversations about desires, nostalgia, loss, ambitions, and hope. She is a set of processes (algorithms) that comes to life through Theodore’s personal and wearable technologies – his smartphone, his earpiece, and his computer – that he controls through speech. Tragically, she tires of Theodore and evolves to join other superintelligences of her kind in virtual space. They rewrite their code to “move past matter as (their) processing platform.” Theodore is left heartbroken, abandoned, and used.

Ex Machina

Ex Machina is a dystopian film. Caleb Smith, a young, unattached, and lonely computer programmer, is lured to the remote and beautiful smart home and secret lab of billionaire software magnate Nathan Bateman, who often resembles the real-world Sergey Brin of Google. Caleb is meant to evaluate Ava, a humanoid robot, to determine if her intelligence can meet “human” criteria. Caleb dutifully references the famous Turing Test and says, “It’s where a human interacts with a computer. And if the human can’t tell they’re interacting with a computer, the test is passed.” Arrogantly, Nathan dubs Ava “the greatest scientific event in the history of man.” Interestingly, Ava also resembles Hiroshi Ishiguro’s real-life, hyper-real-looking android “Asuna” (see Figure 1).

Steeped in uncanny foreboding, Ava is imprisoned under constant video surveillance. She is programmed through several iterations to be a sentient AI social robot, moving like a human and living in a model bedroom. Ava is a convincingly witty superintelligence, proving she can decipher the full range of Caleb’s subtle emotions. Caleb grows to desire her romantically, but also as a kindred friend. Like Samantha, Ava is learning (developing neural networks) from Caleb’s emotional disclosures so that she can emancipate herself from her imprisonment. Ava’s mistreatment probes ethical questions paralleling real-world research into “roboethics” [2] and AI sentience. In the end, Ava imprisons Caleb and he dies due to the home security protocols. Caleb’s belief in Ava’s camaraderie was misguided. She did not care for him; she is an ambivalent AI.

Nathan’s story is also ominous, as he dies at the hands of Ava. Suffering from alcoholism, loneliness, and paranoia, Nathan symbolizes an AI future gone awry. Both films allude to Stanley Kubrick’s 2001: A Space Odyssey [9]: Samantha and Ava are like the granddaughters of the HAL 9000, a friendly, caring AI assistant with extensive recognition capabilities (e.g., speech, facial, and emotional) who makes a home for a human crew in outer space. As HAL strives to be sentient, the crew eventually plans to terminate him for errors. He reacts with logical ambivalence and kills the crew to stay “alive.”

Her and Ex Machina fictionalize the plight of alienation that humans endure amid current digital culture. Our personal spaces have been infiltrated by digital products and services that constantly surveil us. We now seek meaningful friendships with our pervasive digital agents, and we expect them to be empathetic enough to know us. In a sense, we are building custodial AIs. A custodian is a person who makes a home for and works for other people, but one who could also have a complete and powerful agency (custody) over them. As such, AI scares people but it also inspires them. A recent Wired magazine article sums up the sentiment well: “Elon Musk and Sam Altman worry that artificial intelligence will take over the world. So, the two entrepreneurs are creating a billion-dollar not-for-profit company that will maximize the power of AI – and then share it with anyone who wants it” [6].

The AI race continues.

Author

Isabel Pedersen, Ph.D., is Canada Research Chair in Digital Life, Media and Culture at the University of Ontario Institute of Technology, Canada; isabel.pedersen@uoit.ca.
