The promise of the Fourth Industrial Revolution (4IR) is overblown and its perils are underappreciated. There are compelling reasons to reject, and even actively oppose, the 4IR narrative.

The Web has developed an unfair culture in which big tech companies offer free applications in exchange for the right to sell our user-generated content.

Public interest technology (PIT) acknowledges that technological potential can be harnessed to satisfy the needs of civil society. In other words, technology can be seen as a public good that benefits all, through an open democratic system of governance, open data initiatives, open technologies, and open systems and ecosystems designed for the collective good, as defined by the communities that will use them.

Systems can be designed using methodologies such as value-sensitive design and then operationalized to produce socio-technical solutions that support or complement policies addressing environmental sustainability, social justice, or public health. Such systems are then deployed to promote the public interest, or to enable users to act (individually and at scale) in the public interest, toward individual and communal empowerment.

The fiercest public health crisis in a century has elicited cooperative courage and sacrifice across the globe. At the same time, the COVID-19 pandemic is producing severe social, economic, political, and ethical divides, within and between nations. It is reshaping how we engage with each other and how we see the world around us. It urges us to think more deeply about many challenging issues, some of which may offer opportunities if we handle them well. The transcripts that follow speak to the potency and promise of dialogue. They record two in a continuing series of “COVID-19 In Conversations” hosted by Oxford Prospects and Global Development Institute.

Disease prevention through successful vaccination is a double-edged sword, as it can create the illusion that mass vaccination is no longer warranted. Antivaccination movements are not new; most recently, for example, parents have been declining childhood vaccines at alarming levels [2, S9]. Safety concerns and misinformation appear to be at the forefront of these movements.

Just as the “autonomous” in lethal autonomous weapons allows the military to dissemble over responsibility for their effects, there are civilian companies leveraging “AI” to exert control without responsibility.

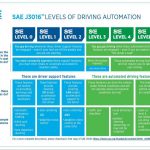

And so we arrive at “trustworthy AI” because, of course, we are building systems that people should trust and if they don’t it’s their fault, so how can we make them do that, right? Or, we’ve built this amazing “AI” system that can drive your car for you but don’t blame us when it crashes because you should have been paying attention. Or, we built it, sure, but then it learned stuff and it’s not under our control anymore—the world is a complex place.

The COVID-19 pandemic has exposed and exacerbated existing global inequalities. Whether at the local, national, or international scale, the gap between the privileged and the vulnerable is growing wider, resulting in a broad increase in inequality across all dimensions of society. The disease has strained health systems, social support programs, and the economy as a whole, drawing an ever-widening distinction between those with access to treatment, services, and job opportunities and those without.

Although artificial intelligence (AI) has huge potential to bring massive benefits to under-served populations, advancing equal access to public services such as health, education, social assistance, or public transportation, it can also drive inequality, concentrating wealth, resources, and decision-making power in the hands of a few countries, companies, or citizens. Artificial intelligence for equity (AI4Eq) calls upon academics, AI developers, civil society, and government policy-makers to work collaboratively toward a technological transformation that increases the benefits to society, reduces inequality, and aims to leave no one behind.

From the 1970s onward, we began to dream of a leisure society in which, thanks to technological progress and the resulting increase in productivity, working hours would be minimized and we would all live in abundance. We could all devote our time almost exclusively to personal relationships, contact with nature, the sciences, the arts, playful activities, and so on. Today, this utopia seems more unattainable than it did then. Since the start of the 21st century, inequality has become increasingly accentuated: of the increase in wealth in the United States between 2006 and 2018, adjusted for inflation and population growth, more than 87% went to the richest 10% of the population, while the poorest 50% lost wealth.

Understanding the societal trajectory induced by AI, and anticipating its directions so that we might apply it for achieving equity, is a sociological, ethical, legal, cultural, generational, educational, and political problem.

We can perhaps accept Weil’s starting premise of obligations as fundamental concepts, based on which we can also reasonably accept her assertion that “obligations … all stem, without exception, from the vital needs of the human being.”

The issue of air pollution is a “wicked problem” — complicated by incomplete knowledge, both within the scientific community and among various stakeholders.

One of the major ways in which the development of self-driving cars has been discussed — the levels of automation drawn up by the Society of Automotive Engineers (SAE) — is misleading. A typology originally developed to provide some engineering clarity now benefits technology developers far more than it serves the public interest.

With techno-feudalism, what is paid and permitted in a digital space is decided by asymmetric power, not mutual consent. Political approval for funding priorities, education programs, and regulation all favors Big Tech.

Will We Make Our Numbers? The year 2020 has a majority of the planet asking the simple question: “How do we stay alive?” Competition is not working for the long-term sustainability of human and environmental well-being.

As we work to decouple carbon emissions from economic growth on the path to net zero emissions (so-called “clean growth”), we must also meaningfully deliver sustainable, inclusive growth with emerging technologies.

With more than 50% of the global population living in non-democratic states, and keeping in mind the disturbing trend toward authoritarianism among populist leaders in supposedly democratic countries, it is easy to imagine dystopian scenarios about the destructive potential of digitalization and AI for the future of freedom, privacy, and human rights. But AI and digital innovations could also be enablers of a Renewed Humanism in the Digital Age.

While many of us hear about the latest and greatest breakthroughs in AI technology, we hear less about its environmental impact. In fact, much of AI’s recent progress has required ever-increasing amounts of data and computing power. We believe that tracking and communicating the environmental impact of machine learning (ML) should be a key part of the research and development process.

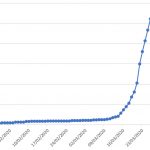

Disruptions can have positive as well as negative impacts on natural and human systems. Among the most fundamental disruptions to global society over the last century is the rise of big data, artificial intelligence (AI), and other digital technologies. These digital technologies have created new opportunities to understand and manage global systemic risks.