NYU Press

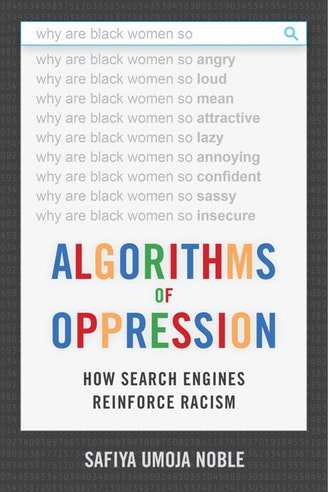

Algorithms of Oppression is a well-researched analysis of what Noble (Assistant Professor of Information Studies at UCLA) rightly names “technological redlining.” She describes, and illustrates, how discrimination is “embedded in computer code and, increasingly, in artificial intelligence technologies that we are reliant on, by choice or not.” The book’s publication is particularly timely, given recent focused attention on the societal impact of technology companies in areas such as election hacking, consumer privacy, and the psychological effects of social networks.

Noble’s book is well-illustrated with screenshots from searches that reveal the embedded racism of the Internet. (Noble’s examples run from 2010 to 2016, and she acknowledges that Google has changed its algorithm to some degree, as can be seen in some of the later screenshots.) “Although I focus mainly on the example of Black girls to talk about search bias and stereotyping, Black girls are not the only girls and women marginalized in search.” Searches for black, Asian, Latino, Hispanic, African American, and Asian Indian girls returned sickening pornographic images. As she notes, “…gender and race are socially constructed,” and the undeniable underrepresentation of women and people of color in Silicon Valley is a complicating factor in the skewed narrative that digital media presents. “Filling the pipeline and holding ‘future’ Black women programmers responsible for solving the problems of racist exclusion and misrepresentation in Silicon Valley or in biased product development is not the answer.”

Given the ubiquity of “search,” many people have the illusion that search engines return accurate, unbiased information. Google, Noble reminds us, is a commercial enterprise, not “an information resource that is working in the public domain, much like a library.” Not that libraries have an unblemished record in this regard. Information organization is never neutral but derives from “sociopolitical and historical processes that serve particular interests.”

Noble illustrates the biases of Library of Congress Subject Headings and writes about the work of radical librarian Sanford Berman, who “led the field in calling for anti-racist interventions into library catalogs in the 1970s.” Yet it took a two-year organizing effort (2014–2016) for the Library of Congress to “replace the term ‘illegal aliens’ with ‘noncitizens’ and ‘unauthorized immigrants’ in its subject headings.” As discouraging as it is to learn that the Library of Congress once classified Asian Americans as the “Yellow Peril,” at least library classification schemes are visible and correctable. Algorithms and machine learning are not.

The evidence that Noble uncovers shows how unchallenged these algorithms are. She asserts, “The problems of big data go deeper than misrepresentation, for sure. They include decision-making protocols that favor corporate elites and the powerful, and they are implicated in global economic and social inequality. Deep machine learning, which is using algorithms to replicate human thinking, is predicated on specific values from specific kinds of people — namely, the most powerful institutions in society and those who control them.” (This issue is addressed convincingly, and in great depth, in Cathy O’Neil’s important 2016 book Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy.)

Since Google derives much of its income from advertising, the ranking of search engine results is explicitly skewed to favor advertisers and not consumers. Many users have difficulty distinguishing between paid advertising and organic search results. “Search happens in a highly commercial environment, and a variety of processes shape what can be found; these results are then normalized as believable and often presented as factual.” That Google returns results in an order that favors its own financial interests is problematic, but a much more serious problem is the proliferation of hate speech.

Noble offers the case of Dylann Roof, the young man who murdered nine worshippers at the Mother Emanuel AME Church in Charleston in 2015. Trying to make sense of the Trayvon Martin case, Roof researched “black on White crime.” The results he saw came from the white nationalist Council of Conservative Citizens, not the U.S. Department of Justice or the FBI. She writes, “It is critical that we think about the implications of people who are attempting to vet information in the news media about race and race relations and who are led to fascist, conservative, anti-Black, anti-Jewish, and/or White supremacist websites.”

Noble argues that the harmful real-life effects of digital media necessitate an increase in government regulation. “Attempts to regulate decency vis-à-vis racist, sexist, and homophobic harm have largely been unaddressed by the FCC, which places the onus for proving harm on the individual. I am trying to make the case …that unregulated digital platforms cause serious harm. Trolling is directly linked to harassment offline, to bullying and suicide, to threats and attacks. The entire experiment of the Internet is now with us, yet we do not have enough intense scrutiny at the level of public policy on its psychological and social impact on the public.”

Regulation also bears on the consumer’s right to privacy, which Noble frames as the “value of social forgetfulness.” “These rights to become anonymous include our rights to become who we want to be, with a sense of future, rather than to be locked into the traces and totalizing effect of a personal history that dictates, through the record, a matter of truth about who we are and potentially can become.”

Algorithms of Oppression is, ultimately, a call to activism. “My hope is that the public will reclaim its institutions and direct our resources in service of a multiracial democracy. …we must fight to suspend the circulation of racist and sexist material that is used to erode our civil and human rights.” Unfortunately, Noble’s use of highly politicized language — hegemonic control, neoliberalism, persistent racialized oppression, gendered identities, etc. — is wearisome and ultimately detracts from the strength of her argument. Still, Noble’s perspective as a black feminist information professional is an important voice to listen to in this necessary and growing conversation.

Reviewer Information

Rachelle Linner, a freelance writer, has an M.S. L.I.S. from Simmons College, School of Library and Information Science. She has worked at search-related software companies for over twenty years. She can be reached at linner02128@yahoo.com.

JOIN SSIT